Active Object Recognition

It is argued that single-view object recognition is subject to many difficulties, mainly due to viewpoint-related ambiguities, occlusions and coincidences. Active recognition, that is, recognition that can vary its viewpoint according to the current state of interpretation, overcomes many of these difficulties. In the active approach, low-level image data is used to drive the sensor to a special viewpoint with respect to the object to be identified. From such a viewpoint, the three-dimensional object recognition problem reduces to a two-dimensional pattern recognition problem. The system integrates several novel contributions.

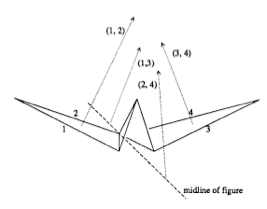

First, there is a requirement for robust tracking of image primitives from one sensor position to the next, for which a novel tracking algorithm is presented. The tracking is facilitated by the use of primitive-centred coordinate bases and local context for the description of image primitives. Each primitive P is described by the set of neighbouring primitives, expressed in a coordinate system based on P.
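The primitive-centred description can be illustrated with a minimal Python sketch. It assumes straight line segments as primitives (midpoint, orientation, length), a neighbourhood radius measured in units of the segment's own length, and a simple nearest-neighbour score for comparing two descriptions. The names `Segment`, `local_context`, `context_distance` and `track` are hypothetical, and this is not the published tracking algorithm. Because each neighbour is expressed relative to P's position, orientation and length, the description does not change when the whole image is translated, rotated or scaled, which is only a crude approximation of the change between nearby sensor positions.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """Hypothetical line primitive: midpoint (x, y), orientation theta (radians), length."""
    x: float
    y: float
    theta: float
    length: float


def wrap_angle(a: float) -> float:
    """Wrap an angle into [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi


def local_context(p: Segment, primitives: list[Segment], radius: float) -> list[tuple[float, float, float]]:
    """Describe p by its neighbours, expressed in a coordinate basis centred on p.

    The origin is p's midpoint, the x-axis follows p's orientation and the unit of
    length is p's length. Neighbours farther than radius * p.length are ignored.
    Each neighbour q becomes (u, v, relative orientation).
    """
    c, s = math.cos(p.theta), math.sin(p.theta)
    context = []
    for q in primitives:
        if q is p:
            continue  # p is not part of its own context
        dx, dy = q.x - p.x, q.y - p.y
        if math.hypot(dx, dy) > radius * p.length:
            continue
        # Rotate by -theta and divide by p's length: translating, rotating or
        # scaling the whole image leaves (u, v, dtheta) unchanged.
        u = (c * dx + s * dy) / p.length
        v = (-s * dx + c * dy) / p.length
        context.append((u, v, wrap_angle(q.theta - p.theta)))
    return sorted(context)


def context_distance(a: list[tuple[float, float, float]], b: list[tuple[float, float, float]]) -> float:
    """Mean distance from each entry of a to its nearest entry in b (asymmetric, hypothetical score)."""
    if not a or not b:
        return math.inf
    total = 0.0
    for u1, v1, t1 in a:
        total += min(math.hypot(u1 - u2, v1 - v2) + abs(wrap_angle(t1 - t2)) for u2, v2, t2 in b)
    return total / len(a)


def track(prev: list[Segment], curr: list[Segment], radius: float = 3.0) -> dict[int, int]:
    """Map each primitive index in prev to the index in curr with the most similar local context."""
    curr_ctx = [local_context(q, curr, radius) for q in curr]
    matches = {}
    for i, p in enumerate(prev):
        ctx = local_context(p, prev, radius)
        scores = [context_distance(ctx, c) for c in curr_ctx]
        matches[i] = min(range(len(curr)), key=scores.__getitem__)
    return matches
```

Matching on local context rather than on absolute image position lets a primitive be re-identified even when camera motion has shifted it well across the image.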

Second, we use simple behaviour-based viewpoint control to achieve the robustness needed to reach a special view reliably. The behaviours are interesting in that they are driven primarily by the current image data and make little use of inference about the three-dimensional structure of the scene. Qualitatively, the three behaviours perform image-line-centering, image-line-following and camera-distance-correcting.
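A minimal sketch of how three image-driven behaviours of this kind might map a measurement of one tracked image line to camera velocity commands. The observation fields, gains, sign conventions and the summation used to combine the behaviours are all assumptions made for illustration; only the three behaviour roles come from the text, and the names `LineObservation`, `Command`, `centre_line`, `follow_line`, `correct_distance` and `combine` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class LineObservation:
    """Hypothetical measurement of the tracked line, in pixels relative to the image centre."""
    mid_x: float
    mid_y: float
    theta: float   # line orientation in the image, radians
    length: float  # apparent length, pixels


@dataclass
class Command:
    """Camera velocity: two tangential components and one radial (positive = away from the object)."""
    tangential_x: float = 0.0
    tangential_y: float = 0.0
    radial: float = 0.0


def centre_line(obs: LineObservation, gain: float = 0.01) -> Command:
    """Image-line-centering: move across the line so that it approaches the image centre."""
    nx, ny = -math.sin(obs.theta), math.cos(obs.theta)  # unit normal to the line
    offset = obs.mid_x * nx + obs.mid_y * ny            # signed distance of the line from the centre
    # Assumed sign convention: moving the camera toward a feature re-centres it.
    return Command(tangential_x=gain * offset * nx, tangential_y=gain * offset * ny)


def follow_line(obs: LineObservation, speed: float = 0.5) -> Command:
    """Image-line-following: move at constant speed along the line direction."""
    return Command(tangential_x=speed * math.cos(obs.theta), tangential_y=speed * math.sin(obs.theta))


def correct_distance(obs: LineObservation, target_length: float, gain: float = 0.02) -> Command:
    """Camera-distance-correcting: back off if the line looks too long, approach if too short."""
    return Command(radial=gain * (obs.length - target_length) / target_length)


def combine(commands: list[Command]) -> Command:
    """Sum the behaviours' outputs (a simple arbitration scheme assumed here)."""
    return Command(
        tangential_x=sum(c.tangential_x for c in commands),
        tangential_y=sum(c.tangential_y for c in commands),
        radial=sum(c.radial for c in commands),
    )
```

Each behaviour reads only the current image measurement, with no three-dimensional model of the scene, which mirrors the property emphasized above; at each control step the outputs would be combined, for example `combine([centre_line(obs), follow_line(obs), correct_distance(obs, 120.0)])`.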

Third, we use probabilistic algorithms for efficient storage and retrieval of sets of feature vectors. This problem arises in many recognition tasks, and in particular in recognizing the two-dimensional pattern imaged at each special view. The algorithms use an error model for the feature measurements to prune the search for the best model for a given query. The error model also allows the degree of ambiguity in object identification to be quantified.
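The idea of using an error model both to prune retrieval and to quantify ambiguity can be sketched as follows. This sketch assumes an isotropic Gaussian error model on the feature vectors, treats each query feature as explained by its best-matching stored feature, and substitutes a simple beam-style pruning rule (discard a model once its log-likelihood trails the leader by more than `beam`). It is not the algorithm of the Efficient Serial Associative Memory paper, and `log_gauss`, `retrieve` and `ambiguity` are hypothetical names. Ambiguity is measured here as the entropy of the posterior over the surviving models.

```python
import math


def log_gauss(q, m, sigma):
    """Log-density of observing feature q when the true feature is m (isotropic Gaussian error)."""
    d = len(q)
    sq = sum((a - b) ** 2 for a, b in zip(q, m))
    return -sq / (2 * sigma ** 2) - d * math.log(sigma * math.sqrt(2 * math.pi))


def retrieve(query, models, sigma=1.0, beam=10.0):
    """Score stored models against a query set, pruning hopeless models as features arrive.

    query:  list of feature vectors (tuples of floats).
    models: non-empty dict name -> non-empty list of feature vectors.
    Returns a dict name -> posterior probability, with a uniform prior over surviving models.
    """
    scores = {name: 0.0 for name in models}
    for q in query:
        for name in scores:
            # Each query feature is explained by the model feature that fits it best.
            scores[name] += max(log_gauss(q, m, sigma) for m in models[name])
        best = max(scores.values())
        # Beam pruning: a heuristic, so a discarded model is never reconsidered.
        scores = {n: s for n, s in scores.items() if s >= best - beam}
    best = max(scores.values())
    z = sum(math.exp(s - best) for s in scores.values())  # log-sum-exp normalisation
    return {n: math.exp(s - best) / z for n, s in scores.items()}


def ambiguity(posterior):
    """Shannon entropy in bits: 0 when one model is certain, log2(k) for k equally likely models."""
    return -sum(p * math.log2(p) for p in posterior.values() if p > 0)
```

Processing query features serially lets clearly inconsistent models drop out after only a few comparisons; a residual entropy well above zero signals that the current view does not discriminate between the remaining candidates.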

Finally, we describe a method for selecting additional special views in the case in which there remains uncertainty in the identity of the object of interest from the first special view acquired.

The set of motor behaviours then drives the camera system to search for these special views.

Three interacting behaviours move the camera relative to the surface of a hemisphere over the table, centred on the object group: tangentially, radially, and by rotation in place about the optical axis. Additional behaviours control the x-y motion of the platform and are slaved to the needs of viewpoint selection.
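The three camera behaviours act on different coordinates of the viewing hemisphere. The sketch below assumes a parameterization by radius, azimuth, elevation and roll (hypothetical names, with elevation clamped to the upper hemisphere and a minimum radius) and shows how tangential, radial and in-place rotational moves each change only their own coordinates. It is an illustration of the geometry, not the system's actual motion control.

```python
import math
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class HemispherePose:
    """Hypothetical camera pose on a viewing hemisphere centred on the object group."""
    radius: float     # distance from the hemisphere centre
    azimuth: float    # radians, around the vertical axis
    elevation: float  # radians above the table plane, in [0, pi/2]
    roll: float       # radians about the optical axis; affects image orientation, not position

    def position(self, centre=(0.0, 0.0, 0.0)):
        """Camera position in table coordinates."""
        cx, cy, cz = centre
        ce = math.cos(self.elevation)
        return (cx + self.radius * ce * math.cos(self.azimuth),
                cy + self.radius * ce * math.sin(self.azimuth),
                cz + self.radius * math.sin(self.elevation))

    def optical_axis(self):
        """Unit vector from the camera toward the hemisphere centre."""
        x, y, z = self.position()  # offset from the centre
        return (-x / self.radius, -y / self.radius, -z / self.radius)


def move_tangential(pose, d_azimuth, d_elevation):
    """Tangential behaviour: slide over the sphere, keeping the elevation within the hemisphere."""
    elevation = min(max(pose.elevation + d_elevation, 0.0), math.pi / 2)
    return replace(pose, azimuth=pose.azimuth + d_azimuth, elevation=elevation)


def move_radial(pose, d_radius, min_radius=0.1):
    """Radial behaviour: approach or back away from the object group."""
    return replace(pose, radius=max(pose.radius + d_radius, min_radius))


def rotate_in_place(pose, d_roll):
    """Rotation about the optical axis: position and viewing direction are unchanged."""
    return replace(pose, roll=pose.roll + d_roll)
```

Keeping the degrees of freedom separate means each behaviour can be tuned and reasoned about independently, while their combined effect still moves the camera anywhere on the hemisphere.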

A 1994 movie of the system in action is shown below. Its performance remains impressive even today. Note how the robot recognizes when it cannot reach a particular viewpoint and moves appropriately.

References

- Wilkes, D., Tsotsos, J., Integration of camera motion behaviours for active object recognition, Proc. International Association for Pattern Recognition Workshop on Visual Behaviors, Seattle, June 1994, pp. 10-19.
- Wilkes, D., Tsotsos, J., Behaviors for Active Object Recognition, SPIE Conference, Boston, Sept. 1993, pp. 225-239.
- Wilkes, D., Tsotsos, J.K., Efficient Serial Associative Memory, CVPR'93, pp. 701-702.
- Wilkes, D., Tsotsos, J.K., Active Object Recognition, CVPR-92, Urbana, Ill., 1992, pp. 136-141.
- Wilkes, D., Dickinson, S., Tsotsos, J.K., Quantitative Modelling of View Degeneracy, Proceedings of the Eighth Scandinavian Conference on Image Analysis, Tromso, Norway, May 1993.
- Wilkes, D., Dickinson, S., Tsotsos, J., A quantitative analysis of view degeneracy and its use for active focal length control, International Conference on Computer Vision, Cambridge MA, June 1995.
- Dickinson, S., Christensen, H., Tsotsos, J., Olofsson, G., Active Object Recognition Integrating Attention and Viewpoint Control, Proceedings, Third European Conference on Computer Vision, Stockholm, May 2-6, 1994.
- Dickinson, S., Christensen, H., Tsotsos, J., Olofsson, G., Active object recognition integrating attention and viewpoint control, Computer Vision and Image Understanding 67(3), 1997, pp. 239-260.
- Dickinson, S., Stevenson, S., Amdur, E., Tsotsos, J., Olsson, L., Integrating Task-Directed Planning with Reactive Object Recognition, SPIE Conference, Boston, Sept. 1993.
- Tsotsos, J.K., Intelligent Control for Perceptually Attentive Agents: The S* Proposal, Robotics and Autonomous Systems 21(1), July 1997, pp. 5-21.