Anomalous Behaviour Detection using Spatiotemporal Oriented Energies, Subset Inclusion Histogram Comparison and Event-Driven Processing

Contributors

- Andrei Zaharescu

- Richard P. Wildes

Overview

This work presents a novel approach to anomalous behaviour detection in video. Detecting anomalous behaviour relative to a model of expected behaviour is a fundamental task in surveillance scenarios. The approach comprises three key components. First, distributions of spatiotemporal oriented energy are used to model behaviour. This representation can capture a wide range of naturally occurring visual spacetime patterns and has not previously been applied to anomaly detection. Second, a novel method is proposed for comparing an automatically acquired model of normal behaviour with new observations. The method accounts for situations in which only a subset of the model is present in a new observation, as when multiple activities are acceptable in a region yet only one is likely to occur at any given instant. Third, event-driven processing is employed to automatically mark the portions of the video stream that are most likely to contain deviations from the expected, thereby focusing computational effort. The approach has been implemented with real-time performance. Quantitative and qualitative empirical evaluation on a challenging set of natural image videos demonstrates the approach's superior performance relative to various alternatives.

Challenges

- Model a wide range of complicated behaviours.

- Detect fine deviations from acquired models.

- Exhibit robustness to insignificant changes (e.g., illumination effects and differences between differently appearing people performing the same action).

- Realize computations in compact and efficient implementations.

Why Spatiotemporal Oriented Energy Features?

Using the proposed oriented energy feature set for anomalous behaviour detection offers a number of advantages:

- The features are discriminative even in significant clutter, as integrated spatial and temporal, multiscale orientation analysis reveals distinctive signatures.

- The representation is invariant to substantial illumination changes, as oriented energies can be normalized to become invariant to local image contrast.

- The representation is invariant to purely spatial appearance, and thereby to different actors performing the same behaviour, as oriented energies can be marginalized for spatial structure.

- Computation of oriented energies involves only 3D separable convolution and pointwise nonlinear operations, making them amenable to compact, efficient implementation.

Oriented Energy Computation

Events in a video sequence generate diverse structures in the spatiotemporal domain. For instance, a textured, stationary object produces a very different signature in image space-time than the same object would if it were moving. One way to capture the spatiotemporal characteristics of a video sequence is through oriented energies. These energies are derived from the responses of orientation-selective bandpass filters convolved with the spatiotemporal volume produced by a video stream. Responses of filters oriented parallel to the image plane indicate the spatial pattern of observed surfaces and objects (e.g., spatial texture), whereas orientations that extend into the temporal dimension capture dynamic aspects (e.g., velocity and flicker).

For this work, filtering was performed using broadly tuned, steerable, separable filters based on the second derivative of a Gaussian and their corresponding Hilbert transforms. Filtering was executed across seven orientations and five scales using a Gaussian pyramid formulation. A measure of local energy, E, can then be computed according to

$$E(\mathbf{x};\theta,\sigma) = \left[G_2(\theta,\sigma) * I(\mathbf{x})\right]^2 + \left[H_2(\theta,\sigma) * I(\mathbf{x})\right]^2$$

where

- $G_2$ are Gaussian second derivative filters

- $H_2$ are the corresponding Hilbert transform filters

- $*$ denotes convolution

- $\theta$ is the 3D orientation at which filtering is being performed

- $\sigma$ is the scale at which filtering is being performed

- $\mathbf{x} = (x, y, t)$ corresponds to spatiotemporal image coordinates

- $I(\mathbf{x})$ is the image sequence
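A minimal NumPy sketch of the local energy measure, E = (G2 * I)² + (H2 * I)² at one orientation θ and scale σ, is given here for illustration. It is not the authors' implementation: instead of separable, steerable spatial-domain filters, it swaps in a Fourier-domain version that applies the second derivative of a Gaussian along θ and an exact Hilbert transform along θ, so the volume is treated as periodic. The function name `oriented_energy`, the `volume[x, y, t]` axis layout and the unit-vector form of θ are hypothetical choices.

```python
import numpy as np


def oriented_energy(volume, theta, sigma):
    """Oriented energy E = (G2 * I)^2 + (H2 * I)^2 along direction theta.

    volume: 3D array indexed as volume[x, y, t] (hypothetical layout).
    theta:  3-vector giving the filter direction in (x, y, t); normalized here.
    sigma:  standard deviation of the Gaussian, in samples.
    """
    vol = np.asarray(volume, dtype=float)
    d = np.asarray(theta, dtype=float)
    d = d / np.linalg.norm(d)

    # Angular frequency grid (radians per sample) for each axis.
    w = np.meshgrid(*[2 * np.pi * np.fft.fftfreq(n) for n in vol.shape], indexing="ij")
    w_theta = d[0] * w[0] + d[1] * w[1] + d[2] * w[2]  # frequency component along theta
    w_sq = w[0] ** 2 + w[1] ** 2 + w[2] ** 2

    # Fourier transform of the second derivative of a unit-mass Gaussian along theta.
    g2_hat = -(w_theta ** 2) * np.exp(-0.5 * sigma ** 2 * w_sq)
    # Hilbert transform along theta: multiply by -i * sign(w . theta).
    h2_hat = -1j * np.sign(w_theta) * g2_hat

    spectrum = np.fft.fftn(vol)
    g2 = np.real(np.fft.ifftn(spectrum * g2_hat))  # circular convolution G2 * I
    h2 = np.real(np.fft.ifftn(spectrum * h2_hat))  # circular convolution H2 * I
    return g2 ** 2 + h2 ** 2


# Usage: a static grating with stripes varying only along x.
x = np.arange(32)
grating = np.cos(2 * np.pi * 4 / 32 * x)[:, None, None] * np.ones((32, 8, 8))
E_x = oriented_energy(grating, (1, 0, 0), sigma=2.0)  # tuned to the stripes
E_t = oriented_energy(grating, (0, 0, 1), sigma=2.0)  # tuned to temporal change
```

For this static grating, the energy tuned to x is the same at every voxel, because the G2 and H2 responses form a quadrature pair and the phase of the stripes cancels out; the energy tuned to the temporal axis is essentially zero, since nothing changes over time.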

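To attain a purer measure of energy that is more robust to illumination changes, normalization is performed according to

$$\hat{E}(\mathbf{x};\theta_i,\sigma_j) = \frac{E(\mathbf{x};\theta_i,\sigma_j)}{\sum_{\tilde{\theta}\in\Theta}\sum_{\tilde{\sigma}\in\Sigma} E(\mathbf{x};\tilde{\theta},\tilde{\sigma}) + \epsilon}$$

where $\Theta$ and $\Sigma$ are the sets of orientations and scales at which filtering is performed, and $\epsilon$ is a bias term that avoids instabilities when the energy content is small.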
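The normalization can be sketched in a few lines of NumPy, assuming the 7 × 5 = 35 orientation/scale energies at each pixel are stacked along the first array axis and each is divided by their sum plus ε. The function name and the default `eps` value are hypothetical.

```python
import numpy as np


def normalize_energies(energies, eps=1e-6):
    """Divide each channel by the total energy over all channels, plus a bias eps.

    energies: array of shape (C, ...) where axis 0 stacks every
              (orientation, scale) channel at each pixel (hypothetical layout).
    """
    E = np.asarray(energies, dtype=float)
    total = E.sum(axis=0, keepdims=True)  # sum over all orientations and scales
    return E / (total + eps)


rng = np.random.default_rng(0)
E = rng.random((35, 4, 4)) + 0.5          # 7 orientations x 5 scales = 35 channels
low = normalize_energies(E)
high = normalize_energies(9.0 * E)        # tripling contrast scales energy by 9
flat = normalize_energies(np.zeros((35, 4, 4)))  # a textureless region
```

Because scaling image contrast by k scales every energy by k², the normalized values are unchanged up to the small influence of ε, and pixels with no energy map to zero instead of to amplified noise.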

In addition, the filter outputs are marginalized for appearance (for more details, see the paper) in order to attain invariance to purely spatial appearance.

Model Acquisition, Maintenance and Comparison

- Both the model and newly acquired video observations are given as pixelwise distributions (histograms) of appearance-marginalized, intensity-normalized spatiotemporal oriented energies, as described above.

- Event-driven processing focuses operations on scene dynamics: video information is entered into initial model construction, ongoing model updates and new observation construction only when the video shows significant local non-static energy.

- A new histogram comparison method, based on χ², is introduced that incorporates the idea of subset inclusion: the current observation can be a subset of the already acquired model of normal behaviour. By allowing for such partial matches, complicated models that admit multiple behaviours (e.g., both leftward and rightward motion) can be matched even when a single observation contains only one of those components.
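The page does not spell out the update rule or the event criterion, so this NumPy sketch of event-driven model maintenance rests on assumptions: the model is a per-pixel exponential running average of observed energy histograms, one histogram channel (`static_idx`) is taken to capture static structure, and a pixel counts as an event when the energy outside that channel exceeds a threshold `tau`. All names and parameter values are hypothetical.

```python
import numpy as np


def event_gated_update(model, observation, static_idx=0, alpha=0.05, tau=0.5):
    """Blend a new observation into the model only at pixels showing dynamics.

    model, observation: arrays of shape (C, H, W) holding per-pixel histograms
        of normalized oriented energies (C channels).
    static_idx: index of the channel assumed to capture static structure.
    alpha: hypothetical update rate; tau: hypothetical event threshold.
    """
    total = observation.sum(axis=0)
    non_static = total - observation[static_idx]  # energy outside the static channel
    event = non_static > tau                      # (H, W) mask of "event" pixels

    updated = model.copy()
    # Exponential running average, applied only where an event was detected.
    updated[:, event] = (1 - alpha) * model[:, event] + alpha * observation[:, event]
    return updated, event


model = np.zeros((3, 2, 2))
obs = np.empty((3, 2, 2))
obs[:] = np.array([0.95, 0.05, 0.0])[:, None, None]  # mostly static everywhere
obs[:, 0, 0] = [0.1, 0.9, 0.0]                       # one pixel with strong motion
new_model, event = event_gated_update(model, obs, alpha=0.5)
```

Only the pixel with strong motion is blended into the model; static pixels leave it untouched, so periods without activity do not wash out the learned behaviour.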

Results

Both hardware- and software-based implementations of the described approach have been developed. A 120-core GPU implementation runs at 5 ms/frame on 320 × 240 images. Results are presented below, emphasizing three main aspects of the method: the representation, the histogram comparison and the event-driven processing.

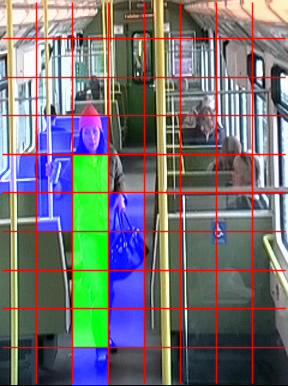

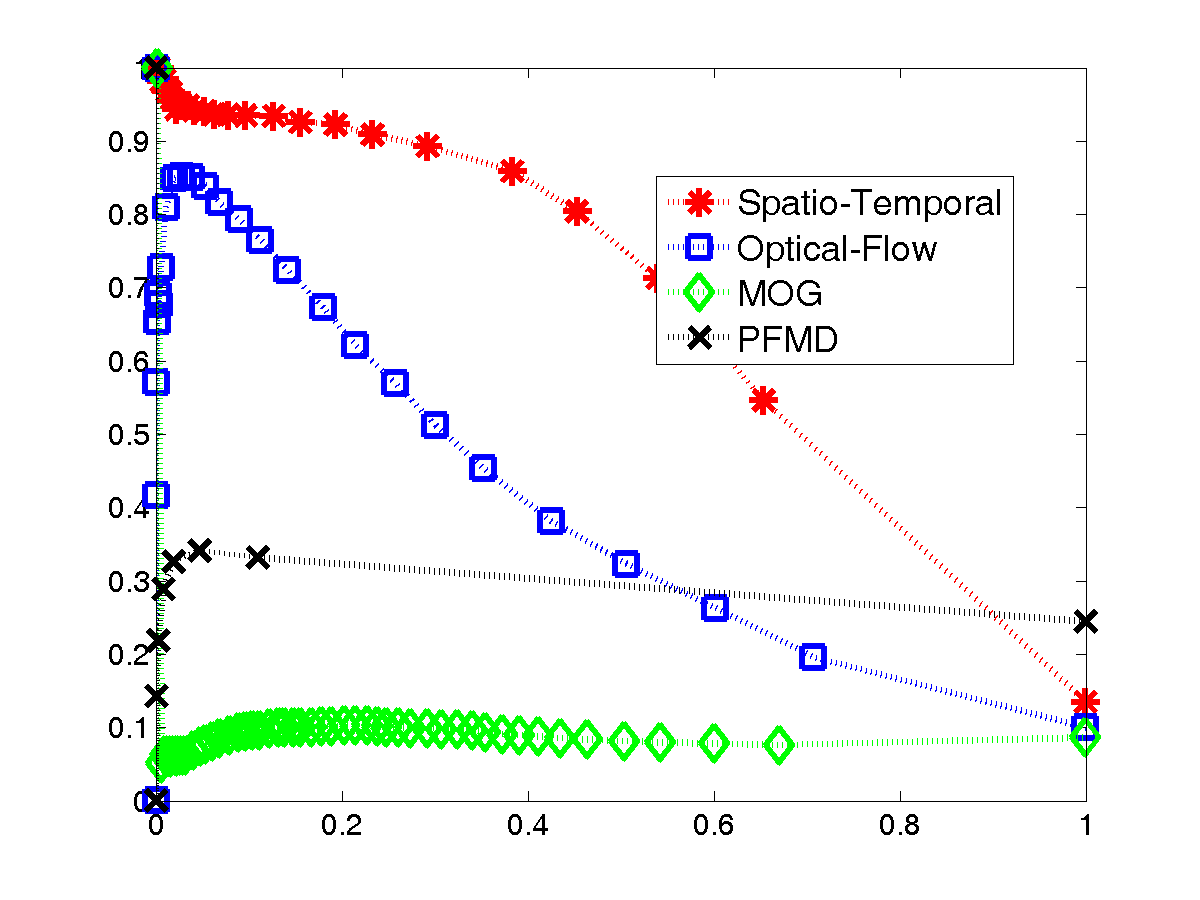

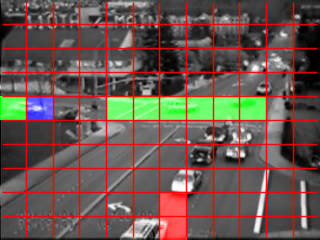

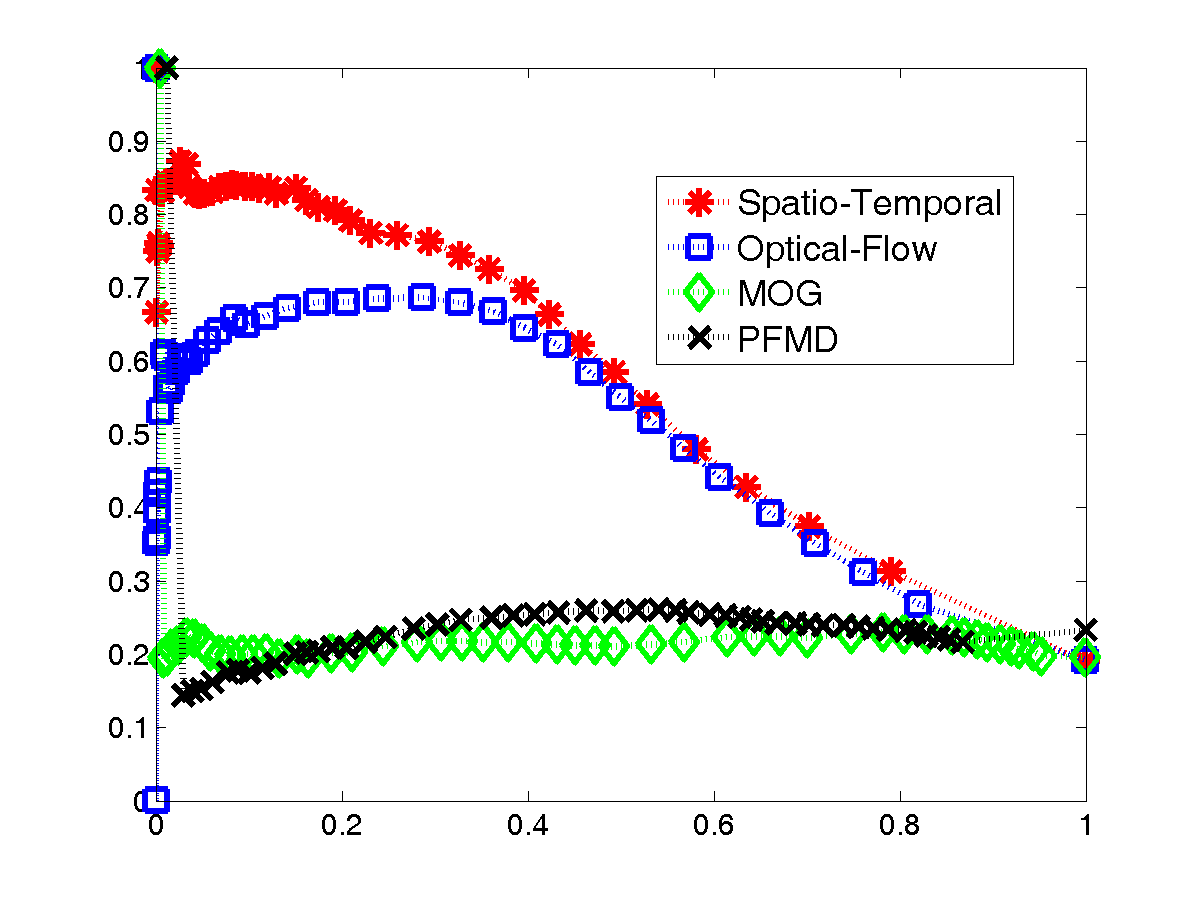

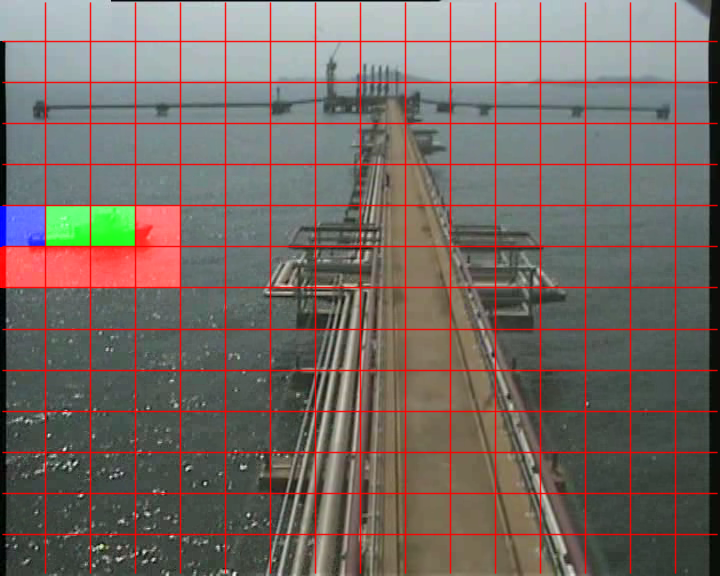

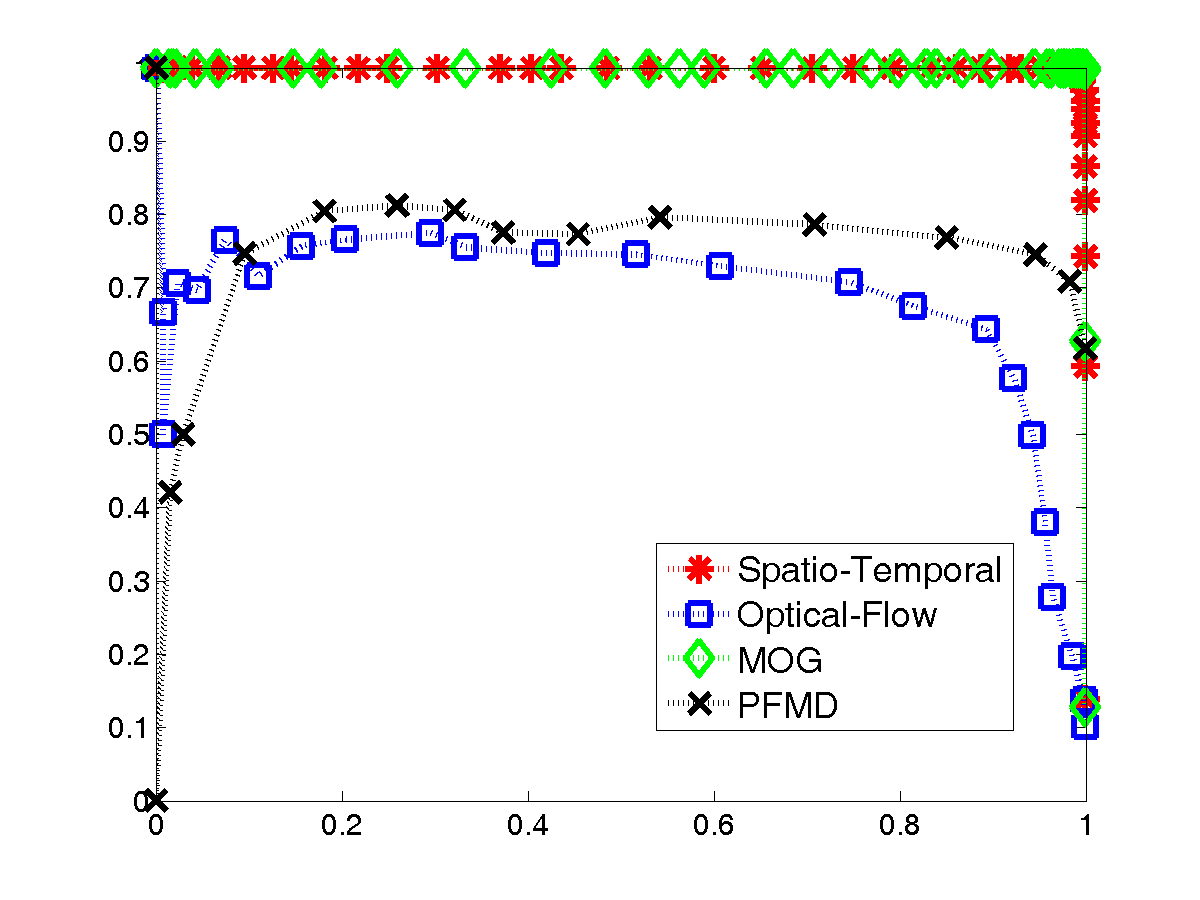

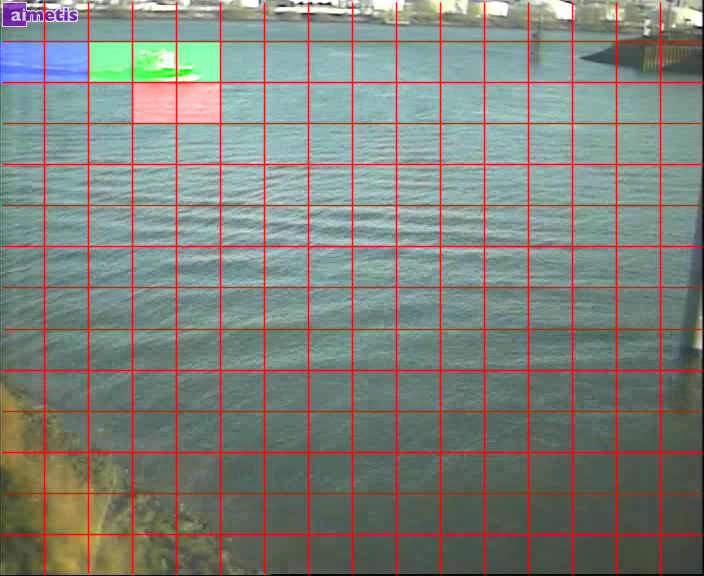

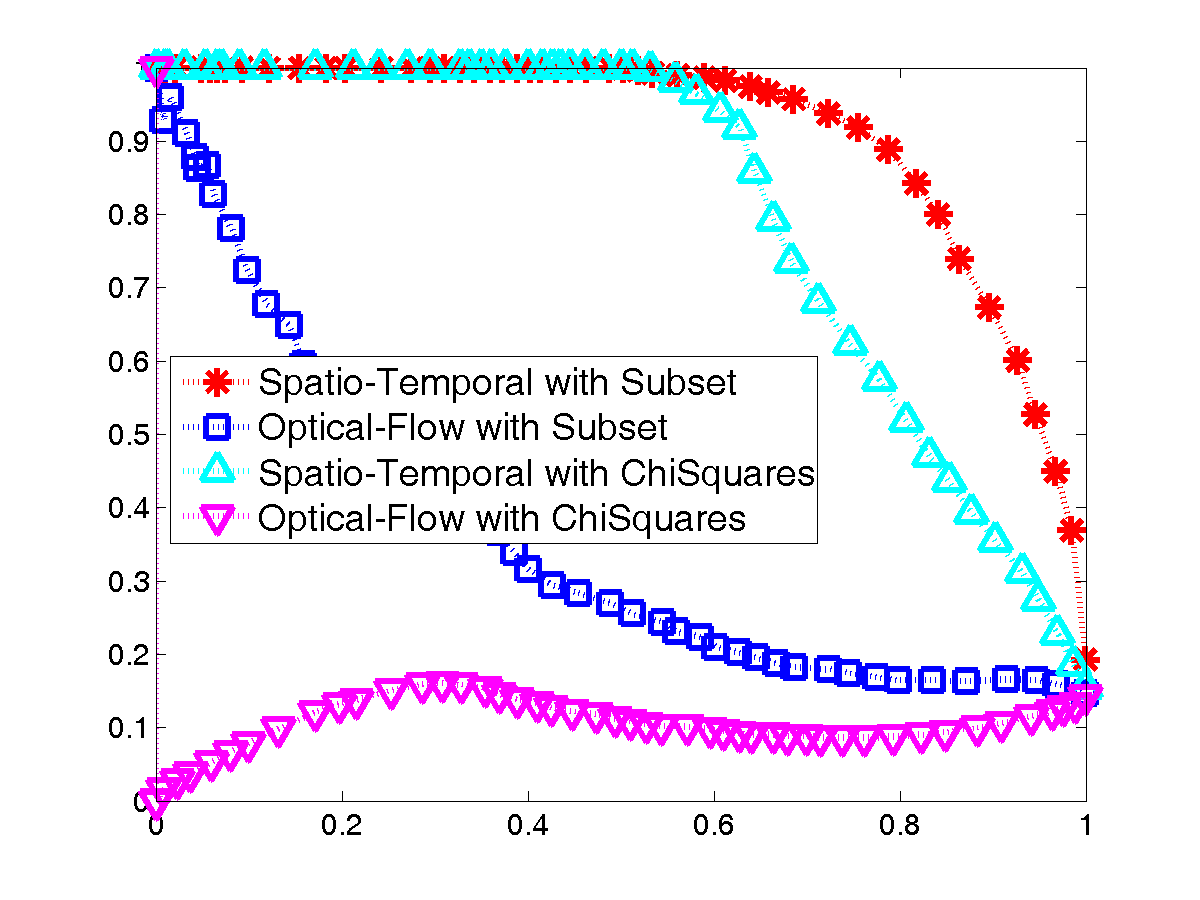

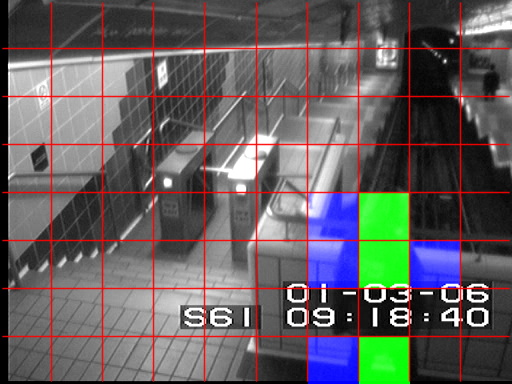

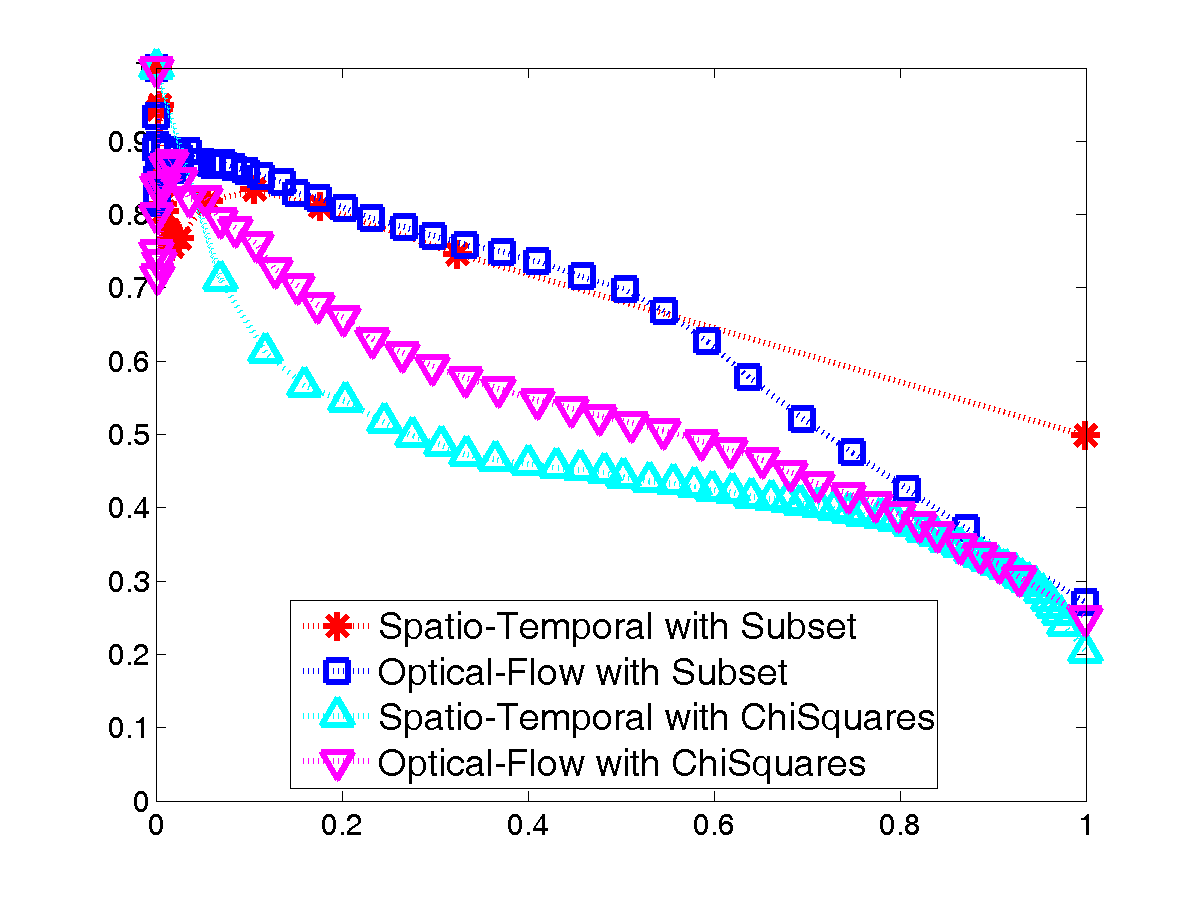

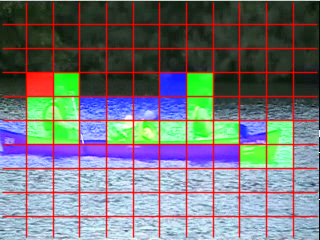

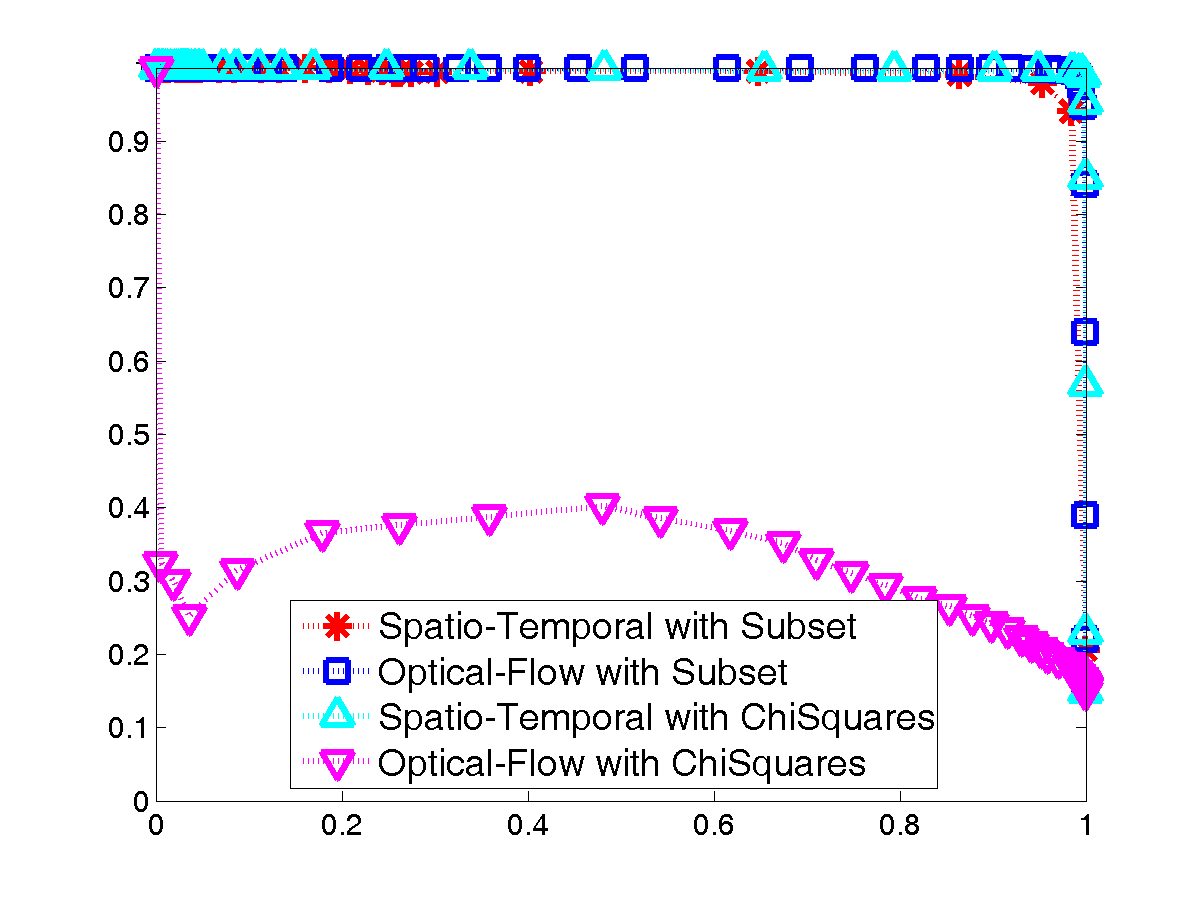

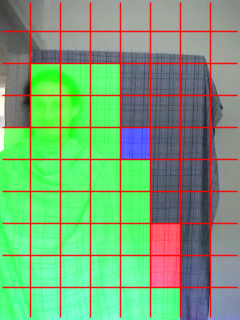

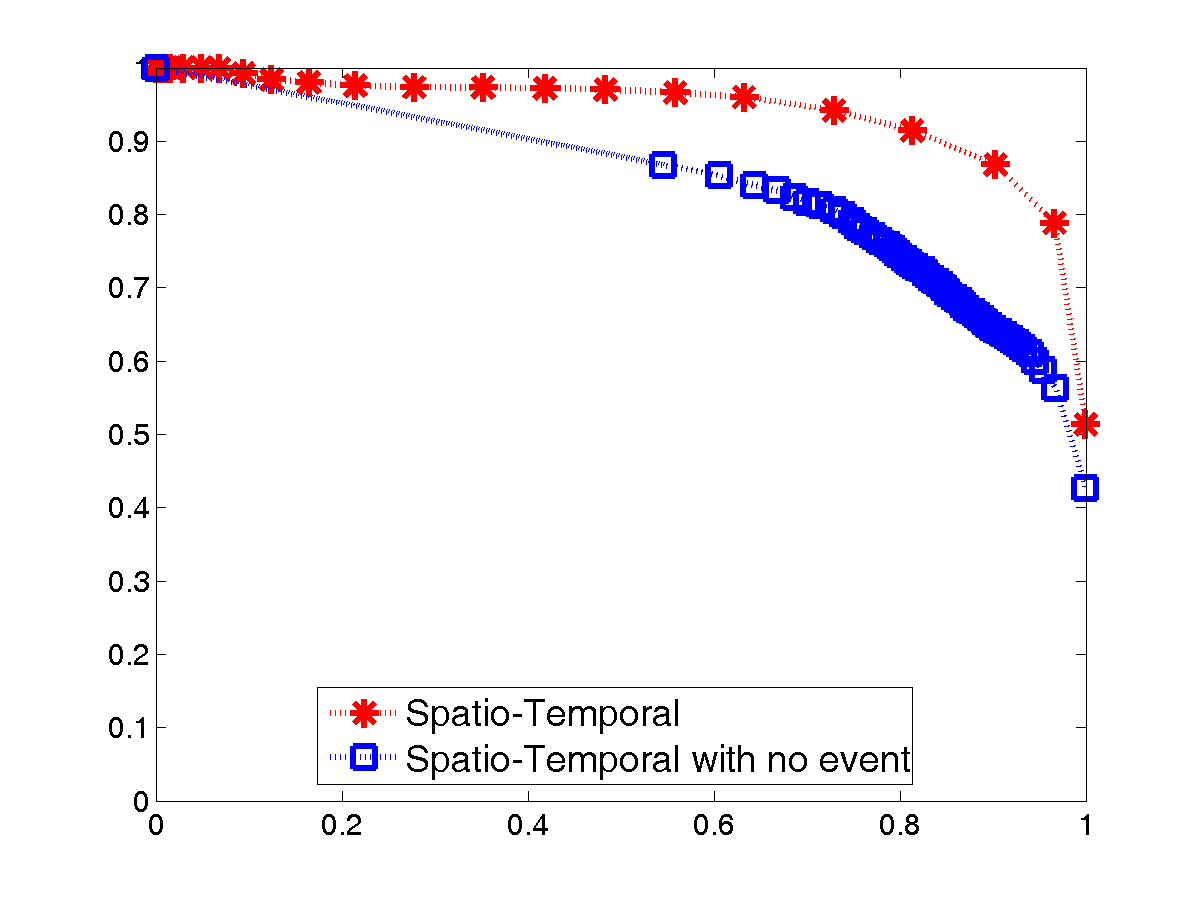

In the following examples, the first column shows a frame from the evaluation of the proposed method against the manually marked ground truth. The colour coding is: green, true positive; red, false positive; blue, false negative. The second column presents the precision/recall curves (abscissa: recall; ordinate: precision), each curve containing 20 measurements.
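The precision/recall curves follow from the same true-positive, false-positive and false-negative counts that the colour coding displays. The page does not state how the 20 measurements were obtained; this sketch assumes 20 evenly spaced thresholds on a per-pixel anomaly score in [0, 1], and the function name is hypothetical.

```python
import numpy as np


def precision_recall_curve(scores, groundtruth, n_points=20):
    """Sweep n_points detection thresholds over anomaly scores in [0, 1].

    scores: per-pixel anomaly scores, any shape.
    groundtruth: boolean array of the same shape, True where marked anomalous.
    Returns arrays (precision, recall), one entry per threshold.
    """
    scores = np.asarray(scores, dtype=float).ravel()
    gt = np.asarray(groundtruth, dtype=bool).ravel()
    precision, recall = [], []
    for t in np.linspace(0.0, 1.0, n_points):
        detected = scores >= t
        tp = np.sum(detected & gt)    # green: correctly flagged
        fp = np.sum(detected & ~gt)   # red: flagged but not anomalous
        fn = np.sum(~detected & gt)   # blue: anomalous but missed
        # Assumed convention: precision is 1 when nothing is flagged.
        precision.append(tp / (tp + fp) if tp + fp > 0 else 1.0)
        recall.append(tp / (tp + fn) if tp + fn > 0 else 1.0)
    return np.array(precision), np.array(recall)


p, r = precision_recall_curve([0.9, 0.8, 0.3, 0.1], [True, False, True, False])
```

At the lowest threshold everything is flagged, giving full recall at precision 0.5; at the highest nothing is flagged, so recall falls to zero.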

Representation

The following examples show the advantages of the spatiotemporal representation over other representations: optical flow, a mixture-of-Gaussians background model and PFMD (Percentage Frames Motion Detected).

Title: Train

Desc: Very challenging train sequence due to drastically varying lighting conditions and camera jitter. Abnormalities: People movement.

Title: Belleview

Desc: Cars moving through an intersection. The model is constructed during the day; testing continues through the night. Abnormalities: Cars entering the thoroughfare from the left or right.

Title: Boat-Sea

Desc: A boat is passing by at sea (motion on motion). Abnormalities: Boat movement.

Histogram Comparison

The following examples show the advantages of the newly proposed subset inclusion histogram comparison over standard χ².
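To illustrate why subset inclusion helps, here is a small sketch contrasting standard χ² with a one-sided variant that penalizes only histogram bins in which the observation exceeds the model. This one-sided form is an assumption chosen for illustration, not necessarily the exact measure used in the paper; the function names and the four-bin histograms are hypothetical.

```python
import numpy as np


def chi_square(obs, model, eps=1e-12):
    """Standard symmetric chi-square distance between two histograms."""
    obs, model = np.asarray(obs, float), np.asarray(model, float)
    return float(np.sum((obs - model) ** 2 / (obs + model + eps)))


def subset_chi_square(obs, model, eps=1e-12):
    """Hypothetical one-sided variant: only bins where the observation exceeds
    the model are penalized, so mass the model holds but the observation lacks
    (e.g. an alternative allowed behaviour) costs nothing."""
    obs, model = np.asarray(obs, float), np.asarray(model, float)
    excess = np.maximum(obs - model, 0.0)
    return float(np.sum(excess ** 2 / (obs + model + eps)))


model = [0.5, 0.5, 0.0, 0.0]     # normal: leftward or rightward motion
leftward = [1.0, 0.0, 0.0, 0.0]  # observation shows only one allowed behaviour
anomaly = [0.0, 0.0, 1.0, 0.0]   # observation shows behaviour never seen in training
```

Under standard χ² the legitimate leftward observation scores 2/3, a third of the clear anomaly's score of 2; the one-sided variant reduces it to 1/6 while the anomaly still scores 1.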

Title: Boat-River

Desc: Boat passing by on a river (motion on motion). Abnormalities: Boat movement.

Title: Subway-Exit

Desc: Surveillance camera observing pedestrians at a subway exit. Abnormalities: Wrong-way motion (leftward and downward).

Title: Boat-Canoe

Desc: A canoe is passing by (motion on motion); also, some wind-blown foliage in background. Abnormalities: Canoe movement.

Event Driven Processing

The following example shows the advantages of using an event-based update scheme.

Title: Camouflage

Desc: A person in camouflage walking. Rightward motion is learnt as the normal behaviour. A long pause in the middle illustrates event-driven processing. Abnormalities: Leftward motion.

Additional data and results are available at our data set page.

Supplemental Material

Supplemental video of results from ECCV 2010.

Related Publications

- A. Zaharescu and R.P. Wildes, Anomalous Behaviour Detection using Spatiotemporal Oriented Energies, Subset Inclusion Histogram Comparison and Event-Driven Processing, ECCV, 2010.

- K.G. Derpanis and R.P. Wildes, Dynamic Texture Recognition based on Distributions of Spacetime Oriented Structure, CVPR, 2010.

- K. Derpanis and J. Gryn, Three-dimensional nth derivative of Gaussian separable steerable filters, ICIP, vol. 3, pp. 553-556, 2005.
