Visual Tracking using a Pixelwise Spatiotemporal Oriented Energy Representation

Contributors

- Kevin Cannons

- Jacob Gryn

- Richard Wildes

Results from the proposed algorithm on the Sylvester video sequence. Challenging aspects of this video include fast erratic motion, out-of-plane rotation and shear, and illumination changes. Original source: Ross et al., IJCV, 2008.

Overview

This work addresses the problem of visual tracking. Specifically, it presents a novel pixelwise representation for tracking that models both the spatial structure and the dynamics of a target in a unified fashion. The representation is derived from spatiotemporal energy measurements that capture the underlying local spacetime orientation structure at multiple scales. The resulting target representation is rich, including appearance and motion information as well as information about how these descriptors are spatially arranged. In experiments on several challenging video sequences, this richness and robustness led to generally superior performance relative to current state-of-the-art trackers.

Challenges of Visual Tracking

- Illumination effects (e.g., shadows, changes in ambient lighting).

- Scene clutter (e.g., objects in background, other moving scene objects).

- Changes in target appearance. These can be caused by geometric deformations (e.g., rotations), the addition or removal of clothing, and changing facial expressions.

- Abrupt changes in target velocity.

- Occlusions (e.g., self occlusions, occlusions from other scene objects).

- Simultaneously tracking multiple targets with similar appearance.

- Computational efficiency.

Why a Pixelwise Spatiotemporal Oriented Energy Representation?

The choice of representation is key to addressing the above challenges. By construction, spatiotemporal oriented energies provide a uniform and natural way to address them. The energies are well suited to visual tracking for several reasons, including:

- A rich description of the target is attained. The oriented energies encompass both target appearance and dynamics, allowing for a tracker that is more robust to clutter.

- The oriented energies are robust to illumination changes.

- The energies can be computed at multiple scales, allowing for a multiscale analysis of the target attributes.

- The representation is efficiently implemented via linear and pixelwise nonlinear operations, making it amenable to real-time implementation on GPUs.

- The pixelwise measurements maintain the notion of spatial organization within the target region that is lost under some representations (e.g., histograms).
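The illumination-robustness point can be made concrete with a minimal sketch. It assumes, rather than reproduces from the paper, two mechanisms: band-pass filters such as Gaussian second derivatives have no DC response, so an additive brightness offset leaves the energies unchanged; and dividing each channel by the pixelwise sum of energies across channels cancels a multiplicative contrast change, because energies scale with the square of image contrast. The function `normalize_energies` and the constant `eps` are hypothetical names chosen for illustration.

```python
import numpy as np

def normalize_energies(energies: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Divide each energy channel by the pixelwise sum over all channels.

    energies: nonnegative array of shape (C, ...), one oriented-energy
    channel per leading index. eps (a hypothetical value) avoids division
    by zero in textureless regions.
    """
    total = energies.sum(axis=0, keepdims=True)
    return energies / (total + eps)

# Usage: a contrast change by a factor `contrast` scales every energy by
# contrast**2, which the normalization cancels up to the effect of eps.
rng = np.random.default_rng(0)
energies = rng.uniform(0.1, 1.0, size=(4, 8, 8))  # 4 channels, 8x8 pixels
contrast = 3.0
original = normalize_energies(energies)
rescaled = normalize_energies(contrast**2 * energies)
print(np.max(np.abs(original - rescaled)))  # a value below 1e-5
```

Because the normalized channels sum to (almost) one at every pixel, they describe the relative distribution of spacetime orientation at that pixel rather than its absolute contrast.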

Approach

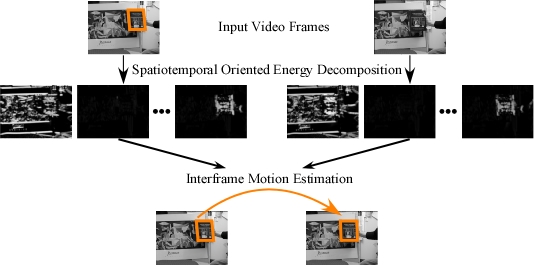

An overview of the proposed approach to visual tracking is illustrated in the figure below.

Overview of visual tracking approach. (top) Two frames from a video in which a book is being tracked. The left image is the first frame, with a crop box defining the target's initial location; the right image is a subsequent frame in which the target must be localized. (middle) Spacetime oriented filters decompose the input into a series of channels capturing spatiotemporal orientation; from left to right, the channels for each frame correspond roughly to horizontal static structure, rightward motion, and leftward motion. (bottom) Interframe motion is computed using the oriented energy decomposition.

The spatiotemporal oriented energy representation, as seen in the system diagram above, is obtained by applying orientation-selective bandpass filters to the spatiotemporal volume representation of a video sequence. This work uses broadly tuned 3D Gaussian second derivative filters and their Hilbert transforms because they can be computed efficiently. Squaring and summing the responses of each filter and its Hilbert transform yields a phase-independent energy measurement for the corresponding spacetime orientation. Note how the representation uniformly encompasses the appearance and dynamic characteristics of the book through the various spatiotemporal oriented energy channels.
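The filtering step can be sketched in a few lines of NumPy. This is a simplified stand-in, not the authors' implementation: instead of steerable spatial-domain filters, the sketch applies the second directional derivative of a 3D Gaussian and a directional Hilbert transform exactly in the frequency domain via the FFT, so the video is treated as periodic. The function name `oriented_energy`, the `(t, y, x)` axis order, and the default `sigma` are assumptions made for illustration.

```python
import numpy as np

def oriented_energy(video: np.ndarray, direction, sigma: float = 1.5) -> np.ndarray:
    """Phase-invariant oriented energy of a (T, H, W) video along `direction`.

    `direction` is a 3-vector in (t, y, x) order giving the filter axis.
    The even filter is the second directional derivative of a 3D Gaussian
    (G2); the odd filter is its Hilbert transform along the same axis.
    Both are applied in the frequency domain (periodic boundary handling).
    """
    n = np.asarray(direction, dtype=float)
    n = n / np.linalg.norm(n)
    T, H, W = video.shape
    # Frequency grids in cycles per sample, broadcast over the volume.
    ft = np.fft.fftfreq(T)[:, None, None]
    fy = np.fft.fftfreq(H)[None, :, None]
    fx = np.fft.fftfreq(W)[None, None, :]
    proj = n[0] * ft + n[1] * fy + n[2] * fx  # frequency component along n
    # Fourier transform of a unit-area Gaussian with standard deviation sigma.
    gauss = np.exp(-2.0 * np.pi**2 * sigma**2 * (ft**2 + fy**2 + fx**2))
    # Differentiating twice along n multiplies the spectrum by (2*pi*i*proj)**2.
    g2 = -((2.0 * np.pi * proj) ** 2) * gauss
    # Directional Hilbert transform: multiply by -i * sign(f . n).
    h2 = -1j * np.sign(proj) * g2
    spectrum = np.fft.fftn(video)
    even = np.real(np.fft.ifftn(spectrum * g2))
    odd = np.real(np.fft.ifftn(spectrum * h2))
    return even**2 + odd**2  # quadrature-pair energy

# Usage: a vertical grating drifting rightward at 1 pixel/frame.
T, H, W = 16, 8, 16
t, _, x = np.meshgrid(np.arange(T), np.arange(H), np.arange(W), indexing="ij")
video = np.cos(2.0 * np.pi * 0.125 * (x - t))
rightward = oriented_energy(video, (-1.0, 0.0, 1.0))  # tuned to rightward motion
leftward = oriented_energy(video, (1.0, 0.0, 1.0))    # tuned to leftward motion
print(rightward.mean(), leftward.max())
```

The drifting grating concentrates its spectrum along the (t, x) direction (-1, 1), so the rightward channel responds strongly while the leftward channel is essentially zero. The rightward energy is also constant across the volume even though the grating itself oscillates, which is the phase independence provided by the quadrature pair.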

Interframe motion is estimated using a pixelwise template warping approach. Under such an approach, the template is matched to the current frame of the image sequence to estimate and compensate for the target's interframe motion. In the present work, both the template and the current frame are represented in terms of oriented energy measurements; therefore, the target's interframe motion is estimated by independently matching the feature measurements of every energy channel.
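A minimal sketch of the matching step follows, under simplifying assumptions that are not taken from the paper: the warp is restricted to integer translations, the search is exhaustive within a small window, and the cost is an unweighted sum of squared differences accumulated over every energy channel. The paper's warping model and any channel weighting may differ; `estimate_translation` and its parameters are hypothetical.

```python
import numpy as np

def estimate_translation(template, frame, prev_top_left, radius):
    """Exhaustive integer-translation search over multichannel energies.

    template:      (C, h, w) oriented energies of the target.
    frame:         (C, H, W) oriented energies of the current frame.
    prev_top_left: (row, col) of the target in the previous frame.
    radius:        maximum displacement, in pixels, searched along each axis.
    Returns the best (row, col) and its sum-of-squared-differences cost.
    """
    _, h, w = template.shape
    _, H, W = frame.shape
    r0, c0 = prev_top_left
    best, best_cost = None, np.inf
    for dr in range(-radius, radius + 1):
        for dc in range(-radius, radius + 1):
            r, c = r0 + dr, c0 + dc
            if r < 0 or c < 0 or r + h > H or c + w > W:
                continue  # candidate window falls outside the frame
            window = frame[:, r:r + h, c:c + w]
            # Pixelwise differences are accumulated over all channels.
            cost = float(np.sum((window - template) ** 2))
            if cost < best_cost:
                best, best_cost = (r, c), cost
    return best, best_cost

# Usage: cut a template from synthetic 5-channel energies, then recover it.
rng = np.random.default_rng(1)
frame = rng.random((5, 32, 32))
template = frame[:, 12:20, 9:17].copy()
location, cost = estimate_translation(template, frame, prev_top_left=(10, 7), radius=4)
print(location, cost)  # (12, 9) 0.0
```

Summing the cost over channels means a candidate location must agree with the template in appearance and motion channels at once, which is where the richness of the representation helps reject clutter.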

Results

In this work, two main experiments were performed on several challenging video sequences. In the first experiment, the proposed spatiotemporal oriented energy features were compared directly against two alternative feature sets. Qualitative and quantitative results illustrate that the proposed features significantly outperformed the alternatives. In the second experiment, the proposed tracking system was compared against several state-of-the-art trackers and was shown to attain generally superior performance. Samples of our tracking results are shown in the videos below. Comparative video results for the entire test set can be viewed in the supplemental material.

Results from the proposed algorithm on the Pop Machines video sequence. Challenging aspects of this video include targets with similar appearance and crossing trajectories, low-quality surveillance video, harsh lighting, and full occlusions by the central pillar. Original source: Cannons et al., ECCV, 2010.

Results from the proposed algorithm on the Occluded Face 2 video sequence. Challenging aspects of this video include in-plane rotation, a cluttered background, significant appearance change due to the addition of a hat, and partial occlusions by the book and hat. Original source: Babenko et al., CVPR, 2009.

Additional data and results are available at our data set page.

Supplemental Material

Supplemental video of tracking results from ECCV 2010 (requires the DivX codec; approximately 50 MB).

Related Publications

- K. Cannons and R.P. Wildes, The Applicability of Spatiotemporal Oriented Energy Features to Region Tracking, PAMI, 36(4), pp. 784-796, 2014.

- K. Cannons, J.M. Gryn, and R.P. Wildes, Visual Tracking using a Pixelwise Spatiotemporal Oriented Energy Representation, European Conference on Computer Vision (ECCV), pp. 511-524, 2010.

- K.J. Cannons, A Review of Visual Tracking, Technical Report CSE-2008-07, York University, September 16, 2008.

- K.J. Cannons and R.P. Wildes, Spatiotemporal Oriented Energy Features for Visual Tracking, ACCV, pp. 532-543, 2007.

- B. Babenko, M.H. Yang, and S. Belongie, Visual Tracking with Online Multiple Instance Learning, CVPR, pp. 983-990, 2009.

- D.A. Ross, J. Lim, R.S. Lin, and M.H. Yang, Incremental Learning for Robust Visual Tracking, IJCV, 77, pp. 125-141, 2008.
