
Natural scene classification is a fundamental challenge in automated scene understanding. The large majority of studies have limited their scope to single image stills and thereby ignore potentially informative temporal cues. This work determines how much recognition performance is gained by considering short videos of natural scenes. Towards this end, the impact of multi-scale orientation measurements on scene classification is systematically investigated, as related to:

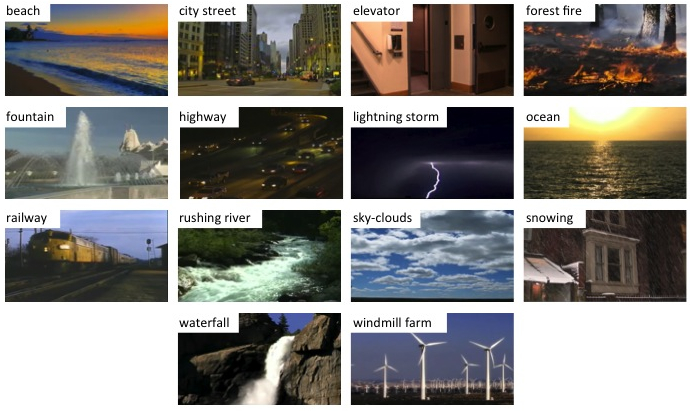

In addition, a new data set (YUPENN Dynamic Scenes) is introduced that contains 420 image sequences spanning fourteen scene categories, with temporal scene information due to objects and surfaces decoupled from camera-induced ones. This data set is used to evaluate classification performance of the various orientation-related representations, as well as state-of-the-art alternatives.

Approach

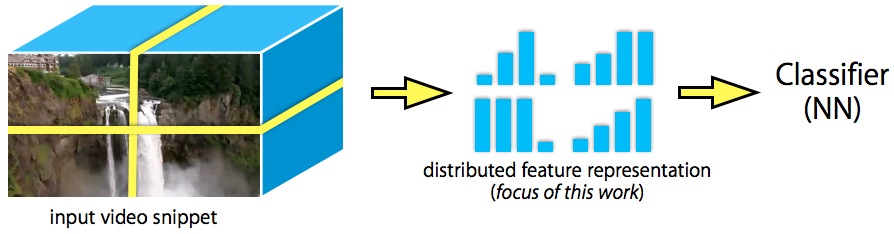

There are two key parts to the analysis of dynamic scene recognition considered in this work (see Approach overview above):

Results

Evaluation was conducted on two data sets: (i) Maryland "In-the-Wild" and (ii) the new YUPENN Dynamic Scenes. Since the Maryland data set contains large camera motions and scene cuts, it is difficult to tell whether the classification performance of an approach depends on its success in capturing underlying scene structure or on characteristics induced by the camera. To shed light on this question, the YUPENN data set is introduced, which contains a wide variety of dynamic scenes captured from stationary cameras. Spacetime oriented energies designed to capture both spatial appearance and temporal dynamics are found to perform best on the stabilized data set. Overall, the spacetime oriented energy feature consistently characterizes dynamic scenes, whether operating in the presence of strictly scene dynamics (stabilized case) or when confronted with overlaid, non-trivial camera motions (in-the-wild case). The alternative approaches considered are less capable of such wide-ranging performance.
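To give a rough sense of what a spacetime oriented energy measures, the sketch below computes normalized energies along a few directions in (t, y, x) for a tiny synthetic video. It is only an illustration under stated assumptions: central finite differences stand in for whatever oriented filters are actually used in this work, and the names `oriented_energies`, `translating_pattern`, and `DIRECTIONS` are hypothetical.

```python
import math

def oriented_energies(video, directions, eps=1e-12):
    """Normalized energy per spacetime direction (dt, dy, dx).

    video is a nested list indexed as video[t][y][x]. Central differences
    are an assumed stand-in for proper oriented bandpass filters.
    """
    T, H, W = len(video), len(video[0]), len(video[0][0])
    # Scale every direction to unit length so energies are comparable.
    units = []
    for d in directions:
        norm = math.sqrt(sum(c * c for c in d))
        units.append(tuple(c / norm for c in d))
    energies = [0.0] * len(units)
    for t in range(1, T - 1):
        for y in range(1, H - 1):
            for x in range(1, W - 1):
                gt = (video[t + 1][y][x] - video[t - 1][y][x]) / 2.0
                gy = (video[t][y + 1][x] - video[t][y - 1][x]) / 2.0
                gx = (video[t][y][x + 1] - video[t][y][x - 1]) / 2.0
                for i, (ut, uy, ux) in enumerate(units):
                    # Squared directional derivative, summed over the volume.
                    response = gt * ut + gy * uy + gx * ux
                    energies[i] += response * response
    # Divide by the total so the result is insensitive to image contrast.
    total = sum(energies) + eps
    return [e / total for e in energies]

def translating_pattern(T, H, W, speed):
    """Vertical sinusoidal stripes moving right at `speed` pixels per frame."""
    return [[[math.sin(0.7 * (x - speed * t)) for x in range(W)]
             for _ in range(H)]
            for t in range(T)]

# Hypothetical direction set: temporal axis, two spacetime diagonals, vertical.
DIRECTIONS = [(1, 0, 0), (1, 0, 1), (1, 0, -1), (0, 1, 0)]

static = oriented_energies(translating_pattern(8, 6, 12, 0), DIRECTIONS)
moving = oriented_energies(translating_pattern(8, 6, 12, 1), DIRECTIONS)
print("static:", [round(e, 3) for e in static])
print("moving:", [round(e, 3) for e in moving])
```

In this toy setting, the static pattern has no energy along the temporal axis, while the moving pattern has no energy along the diagonal that follows its motion through spacetime. The distribution of energy across directions therefore reflects both spatial structure and dynamics, which is the kind of joint cue the text attributes to spacetime oriented energies.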

Supplemental Material

Related Publications
