The Selective Tuning Model

The Selective Tuning model (ST) was first described in my Behavioral and Brain Sciences paper of 1990. The theoretical foundations for it appeared in my ICCV 1987 and IJCAI 1989 papers. The first papers to describe the mechanisms that realize it appeared in a 1991 University of Toronto Technical Report and a book chapter in the 1993 volume Spatial Vision in Humans and Robots, and the first implementation was described in our 1995 Artificial Intelligence Journal paper, where the name ‘Selective Tuning’ also first appeared.

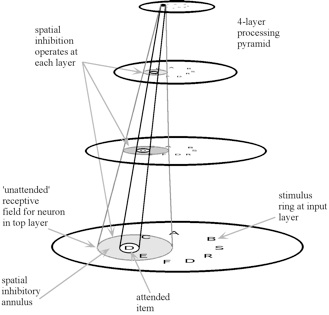

Let us see how Selective Tuning deals with the common attentional mechanism of spatial selection. The following was first presented in our 1995 Artificial Intelligence Journal paper.

Selection relies on a hierarchy of winner-take-all (WTA) processes. WTA is a parallel algorithm for finding the maximum value in a set. First, a WTA process operates across the entire visual field at the top layer: it computes the global winner, i.e., the units with the largest response. The WTA can accept guidance about which areas or stimulus qualities to favour if such guidance is available, but operates independently otherwise. Our WTA is defined to find the strongest regions of response, not only single points.

The search process then proceeds to the lower levels by activating a hierarchy of WTA processes. The global winner activates a WTA that operates only over its direct inputs; this localizes the largest response units within the top-level winning receptive field. Next, all of the connections of the visual pyramid that do not contribute to the winner are pruned. This strategy of finding the winners within successively smaller receptive fields, layer by layer, and then pruning away irrelevant connections is applied recursively through the pyramid. The end result is that, starting from the globally strongest response, the cause of that response is localized in the sensory field at the earliest levels. The paths that remain may be considered the pass zone of an attentional beam, while the pruned paths form its inhibitory zone. The WTA does not violate biological connectivity or time constraints.
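The top-down descent can be sketched in a few lines of Python. This is a toy illustration under our own simplifying assumptions, not the 1995 implementation: the pyramid is one-dimensional, each unit averages `fan_in` non-overlapping inputs (real receptive fields overlap), and "strongest region" is approximated by keeping every unit within a hypothetical tolerance `theta` of the local maximum. The names `build_pyramid`, `wta`, and `selective_descent` are illustrative only.

```python
from typing import Dict, List, Sequence, Set


def build_pyramid(inputs: Sequence[float], fan_in: int = 2, levels: int = 2) -> List[List[float]]:
    """Build a toy 1-D feedforward pyramid (hypothetical): each unit averages
    `fan_in` adjacent, non-overlapping units in the layer below."""
    layers = [list(inputs)]
    for _ in range(levels):
        below = layers[-1]
        layers.append([sum(below[i:i + fan_in]) / len(below[i:i + fan_in])
                       for i in range(0, len(below), fan_in)])
    return layers


def wta(values: Sequence[float], domain: Sequence[int], theta: float) -> Set[int]:
    """Winner-take-all over `domain`: keep every unit within `theta` of the
    domain maximum, so a region (not only a single point) can win."""
    best = max(values[i] for i in domain)
    return {i for i in domain if values[i] >= best - theta}


def selective_descent(layers: List[List[float]], fan_in: int = 2,
                      theta: float = 0.0) -> Dict[int, Set[int]]:
    """Top-down hierarchy of WTAs. Returns the surviving units (pass zone)
    for each layer index; connections to all other units count as pruned."""
    top = len(layers) - 1
    # Global WTA across the whole top layer.
    winners = wta(layers[top], range(len(layers[top])), theta)
    pass_zone = {top: winners}
    for level in range(top, 0, -1):
        below = layers[level - 1]
        # The next WTA competes only among the direct inputs of the current winners.
        domain = sorted({c for w in winners
                         for c in range(w * fan_in, min((w + 1) * fan_in, len(below)))})
        winners = wta(below, domain, theta)
        pass_zone[level - 1] = winners
    return pass_zone
```

For example, `selective_descent(build_pyramid([0.1, 0.2, 0.9, 0.3, 0.1, 0.0, 0.2, 0.1]))` returns `{2: {0}, 1: {1}, 0: {2}}`: the top-level winner is traced back to input position 2, which caused it. Each WTA only ever compares a winner's direct inputs, which is what keeps the search local at every layer.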

Attention is not a single mechanism, as so many, especially in computer vision, seem to insist on believing. Attention is a set of mechanisms that help optimize the search processes inherent in perception and cognition (see my pages on Foundations for Attention and on Visual Attention for motivation and justification). There are three main classes of mechanisms, each including a number of more specific mechanisms:

Selection

- choose a spatio-temporal region of interest
- choose which world/task/object/event model is relevant for the current task
- select the best fixation point in 3D and the best viewpoint from which to view a scene
- select the best interpretation/response

Selection occurs by some decision process over a set of possibilities so that one element of that set is chosen. Winner-Take-All or Soft-Max are two possible mechanisms.
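To make the contrast between these two selection mechanisms concrete, here is a hedged sketch; the function names and the `temperature` parameter are illustrative assumptions, not part of ST. Winner-Take-All returns a single index, while Soft-Max returns graded weights over all candidates that approach a hard choice as the temperature falls.

```python
import math
from typing import List, Sequence


def winner_take_all(scores: Sequence[float]) -> int:
    """Hard selection: index of the single largest score (first one on ties)."""
    return max(range(len(scores)), key=lambda i: scores[i])


def soft_max(scores: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Graded selection: weights that sum to 1 and favour larger scores.
    A lower temperature concentrates more of the weight on the maximum."""
    m = max(scores)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp((s - m) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]
```

With `scores = [0.2, 1.5, 0.9]`, `winner_take_all(scores)` returns `1`, and `soft_max(scores, 0.01)` puts nearly all of its weight on that same index, while higher temperatures spread weight across the alternatives.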

Restriction

- restrict the task relevant search space by pruning the irrelevant
- consider only the locations or features previously presented as cues
- limit the extent of search for task-specified results

Suppression

- suppress interference within receptive fields via location/feature surround inhibition
- suppress task-irrelevant computations (by location, feature, feature range, or higher level representations)
- suppress previously seen locations or objects via inhibition of return

Suppression occurs by multiplicatively inhibiting a set of items within one or more representations; the inhibition need not amount to complete suppression.
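The multiplicative form of suppression, applied here to inhibition of return, can be sketched as follows. This is a minimal illustration under stated assumptions rather than the ST implementation: each item carries a gain in [0, 1], selection is a plain argmax, and the hypothetical `ior_gain` of 0.2 damps an attended item without silencing it.

```python
from typing import Dict, List, Sequence


def suppress(responses: Sequence[float], gains: Dict[int, float]) -> List[float]:
    """Multiplicatively inhibit selected items; gain 1.0 leaves an item
    untouched and gain 0.0 would suppress it completely."""
    return [r * gains.get(i, 1.0) for i, r in enumerate(responses)]


def scan_with_ior(responses: Sequence[float], n_fixations: int,
                  ior_gain: float = 0.2) -> List[int]:
    """Repeatedly attend to the strongest item, then damp it (inhibition of return)."""
    gains: Dict[int, float] = {}
    order: List[int] = []
    for _ in range(n_fixations):
        current = suppress(responses, gains)
        winner = max(range(len(current)), key=lambda i: current[i])
        order.append(winner)
        # Partial, not total, suppression of the item just attended.
        gains[winner] = gains.get(winner, 1.0) * ior_gain
    return order
```

`scan_with_ior([0.5, 0.9, 0.7, 0.1], 4)` visits items `[1, 2, 0, 1]`: because the inhibition is only partial, the originally strongest item becomes the winner again once the others have also been damped.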

This view of attention is the main feature distinguishing this model from others, particularly those that descend directly from the original 1985 saliency map model of Koch and Ullman, which was itself an attempt to realize the Feature Integration Theory presented in 1980 by Treisman and Gelade (see How is ST Distinct? for more).

The Selective Tuning model, whether for visual attention or more broadly for vision, is distinct in important ways from other models, both past and present. A final body of support for ST comes from its predictive power.

We have also conducted a number of human experimental studies that support the modeling work.

Main Selective Tuning Papers

- Tsotsos, J.K., A ‘Complexity Level’ Analysis of Vision, Proceedings of the International Conference on Computer Vision: Human and Machine Vision Workshop, London, England, June 1987.
- Tsotsos, J.K., The Complexity of Perceptual Search Tasks, Proceedings of the International Joint Conference on Artificial Intelligence, Detroit, pp. 1571-1577, August 1989.
- Tsotsos, J.K., Analyzing Vision at the Complexity Level, Behavioral and Brain Sciences 13(3), pp. 423-445, 1990.
- Tsotsos, J.K., Localizing Stimuli in a Sensory Field Using an Inhibitory Attentional Beam, Technical Report RBCV-TR-91-37, Dept. of Computer Science, University of Toronto, October 1991.
- Culhane, S., Tsotsos, J.K., A Prototype for Data-Driven Visual Attention, Proceedings of the 11th ICPR, The Hague, pp. 36-40, August 1992.
- Culhane, S., Tsotsos, J.K., An Attentional Prototype for Early Vision, Proceedings of the Second European Conference on Computer Vision, Santa Margherita Ligure, Italy, G. Sandini (Ed.), LNCS Vol. 588, Springer Verlag, pp. 551-560, May 1992.
- Tsotsos, J.K., An Inhibitory Beam for Attentional Selection, in Spatial Vision in Humans and Robots, L. Harris and M. Jenkin (Eds.), Cambridge University Press, pp. 313-331, 1993.
- Tsotsos, J.K., Culhane, S., Wai, W., Lai, Y., Davis, N., Nuflo, F., Modeling Visual Attention via Selective Tuning, Artificial Intelligence 78(1-2), pp. 507-547, 1995.
- Tsotsos, J.K., Culhane, S., Cutzu, F., From Theoretical Foundations to a Hierarchical Circuit for Selective Attention, in Visual Attention and Cortical Circuits, J. Braun, C. Koch, and J. Davis (Eds.), MIT Press, pp. 285-306, 2001.
- Tsotsos, J.K., Liu, Y., Martinez-Trujillo, J., Pomplun, M., Simine, E., Zhou, K., Attending to Visual Motion, Computer Vision and Image Understanding 100(1-2), pp. 3-40, October 2005.
- Rodriguez-Sanchez, A.J., Simine, E., Tsotsos, J.K., Attention and Visual Search, International Journal of Neural Systems 17(4), pp. 275-288, August 2007.
- Tsotsos, J.K., Rodriguez-Sanchez, A., Rothenstein, A., Simine, E., Different Binding Strategies for the Different Stages of Visual Recognition, Brain Research 1225, pp. 119-132, 2008.
- Rothenstein, A., Rodriguez-Sanchez, A., Simine, E., Tsotsos, J.K., Visual Feature Binding within the Selective Tuning Attention Framework, International Journal of Pattern Recognition and Artificial Intelligence, Special Issue on Brain, Vision and Artificial Intelligence, pp. 861-881, 2008.